Planning with Unified Multimodal Models

Abstract

Given the powerful reasoning capabilities of large language models (LLMs) and vision-language models (VLMs), many recent works have explored using them for decision-making. However, most of these approaches rely solely on language-based reasoning, which limits their ability to reason and make informed decisions. Recently, a promising new direction has emerged with unified multimodal models (UMMs), which support both multimodal inputs and outputs. We believe such models have greater potential for decision-making because they enable reasoning through generated visual content. To this end, we propose Uni-Plan, a planning framework built on UMMs. Within this framework, a single model simultaneously serves as the policy, dynamics model, and value function. In addition, to avoid hallucinations in dynamics predictions, we present self-discriminated filtering, a novel approach in which the generative model serves as a self-discriminator to filter out invalid dynamics predictions. Experiments on long-horizon planning tasks show that Uni-Plan substantially improves success rates compared to VLM-based methods. It also shows strong data scalability: it requires no expert demonstrations and achieves better performance at the same training-data size. This work lays a foundation for future research in reasoning and decision-making with UMMs.

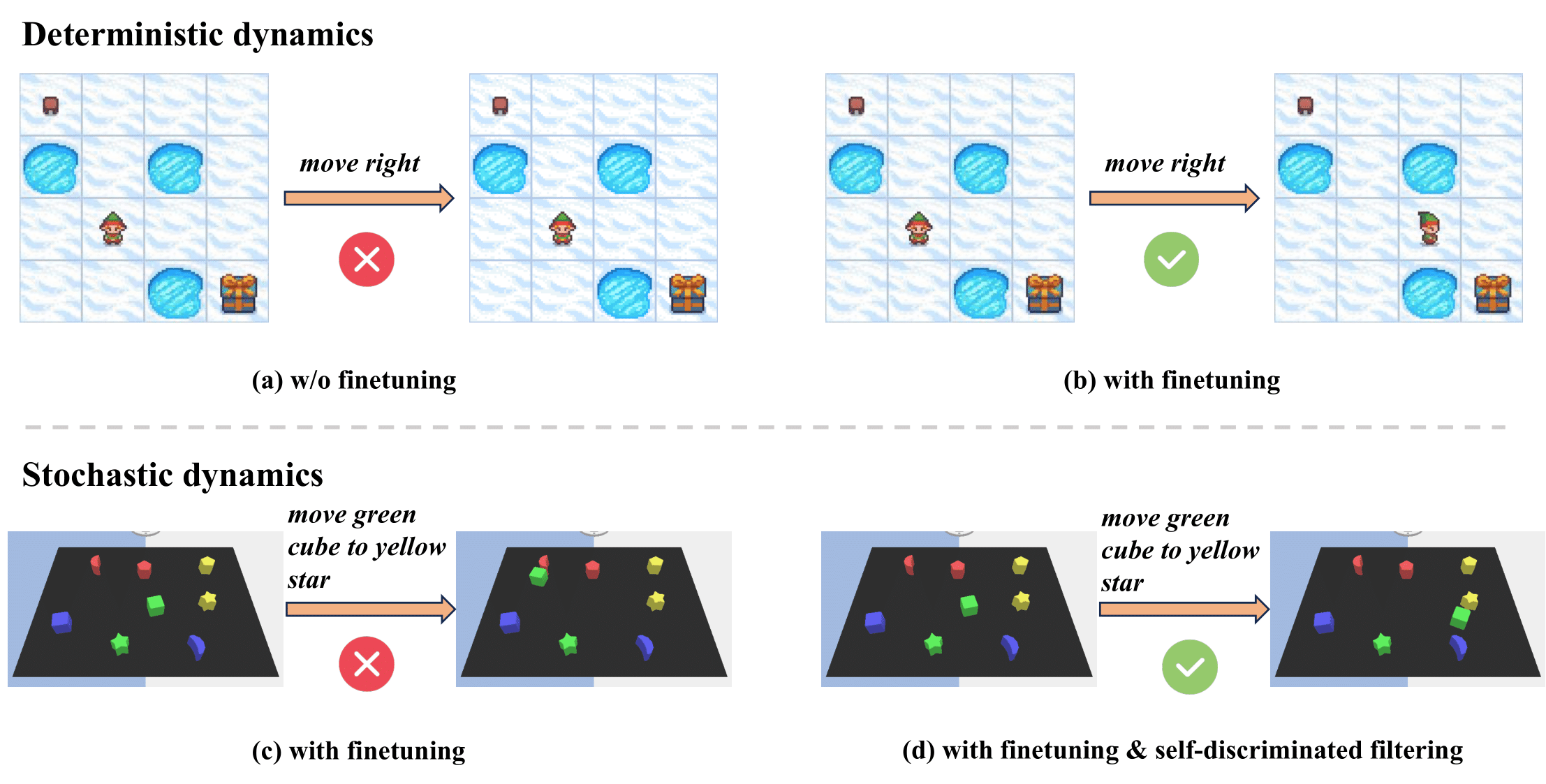

UMM as dynamics models

Off-the-shelf UMMs usually fail to serve as faithful dynamics models. Finetuning mitigates this for simple tasks with deterministic dynamics, but it is not enough for complex tasks with stochastic dynamics.

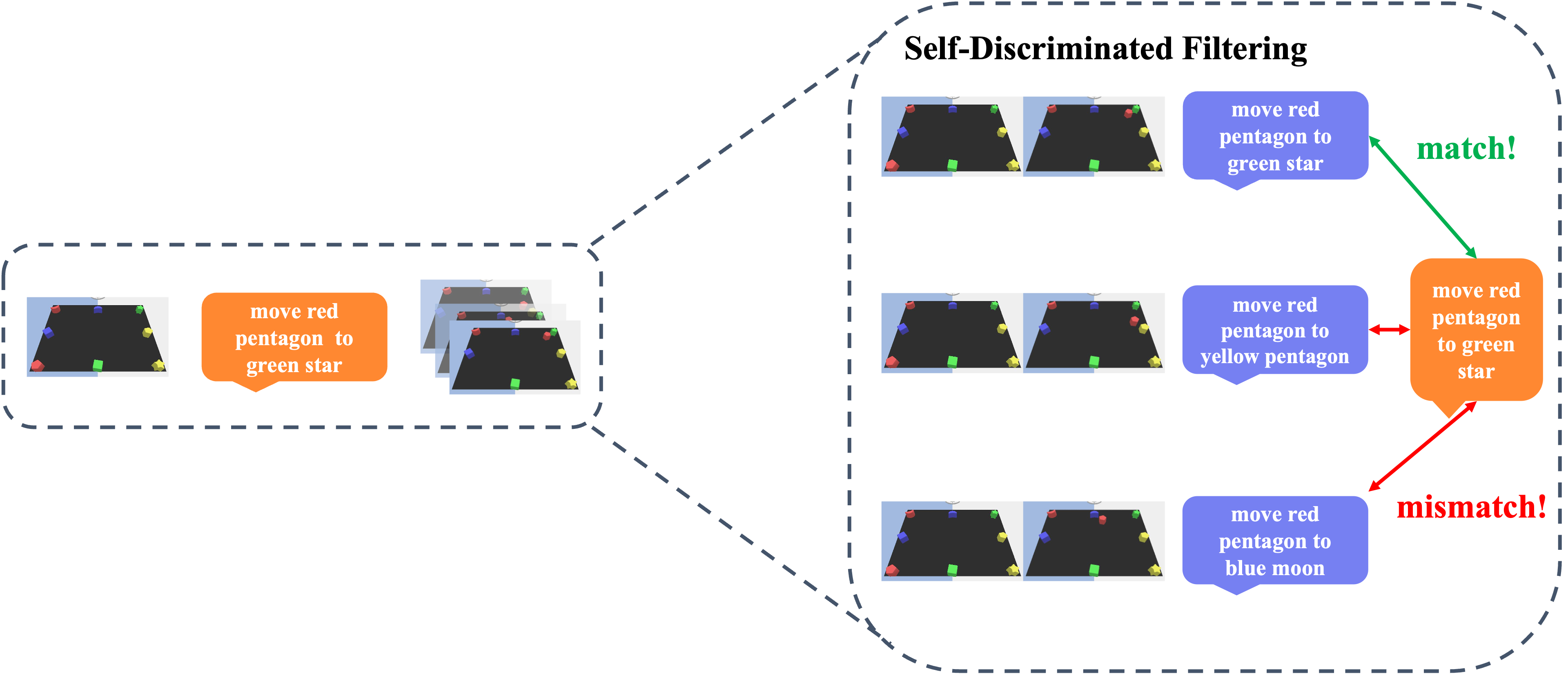

To this end, we propose self-discriminated filtering, a novel approach in which the generative model serves as a self-discriminator to filter out invalid dynamics predictions.

More specifically, the UMM performs inverse dynamics inference: given two observations, it predicts the textual action that describes the transition between them. By comparing the predicted action with the ground-truth action, we can filter out invalid dynamics predictions.
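The filtering step described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the function name `self_discriminated_filter`, the `inverse_dynamics` callable (standing in for the UMM's inverse dynamics inference), the exact-match comparison after text normalization, and the toy grid-world helper are all assumptions made for this example.

```python
from typing import Callable, List, Tuple

GridObs = Tuple[int, int]  # toy observation: (row, col) on a grid


def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different strings match."""
    return " ".join(text.lower().split())


def self_discriminated_filter(
    obs,
    action: str,
    candidates: List,
    inverse_dynamics: Callable[[object, object], str],
) -> List:
    """Keep candidate next observations whose inferred action matches `action`.

    `inverse_dynamics(obs, nxt)` is a hypothetical stand-in for asking the
    UMM which textual action explains the transition obs -> nxt.
    """
    target = _normalize(action)
    return [
        nxt for nxt in candidates
        if _normalize(inverse_dynamics(obs, nxt)) == target
    ]


def toy_inverse_dynamics(obs: GridObs, nxt: GridObs) -> str:
    """Toy replacement for the UMM: read the action off the position change."""
    delta = (nxt[0] - obs[0], nxt[1] - obs[1])
    names = {(0, 1): "move right", (0, -1): "move left",
             (1, 0): "move down", (-1, 0): "move up"}
    return names.get(delta, "no-op")


# Three sampled predictions for "move right" from (0, 0); only one is consistent.
kept = self_discriminated_filter(
    (0, 0), "Move Right", [(0, 1), (1, 0), (0, 0)], toy_inverse_dynamics
)
print(kept)
```

In this toy setting the comparison is an exact string match; with a real UMM the predicted and ground-truth actions are free-form text, so a softer matching rule may be needed.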

Illustration of self-discriminated filtering

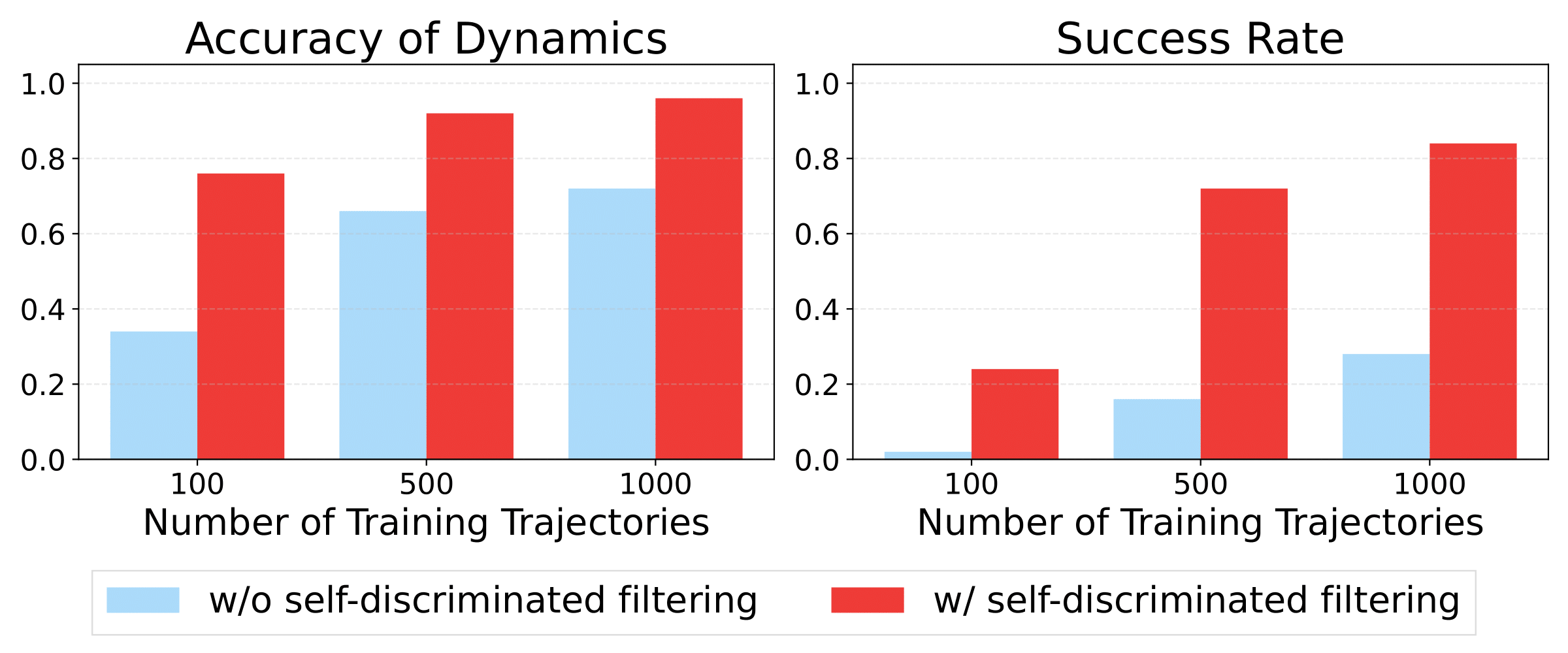

Ablation study of self-discriminated filtering

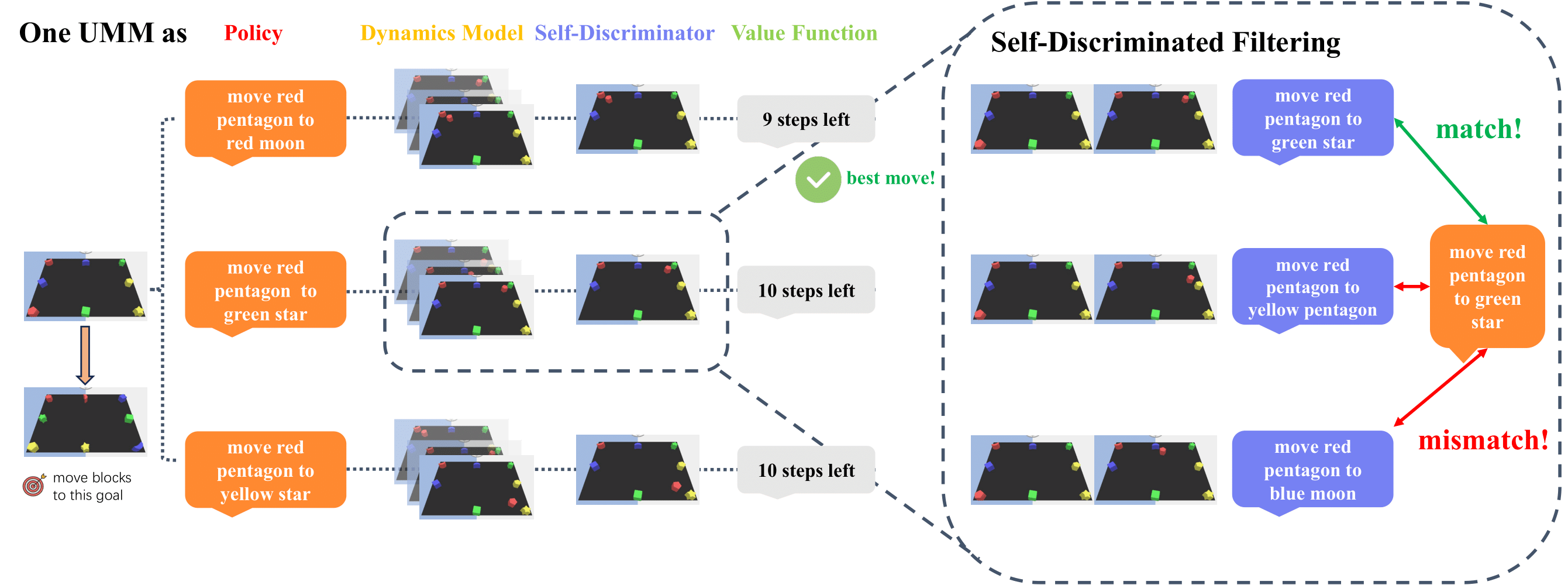

Uni-Plan: A unified planning framework with one single UMM

Uni-Plan uses a single UMM to serve as the policy, dynamics model, self-discriminator, and value function, allowing for a unified planning framework.
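To make the four roles concrete, here is a minimal sketch of how they might fit together in a planning loop. The text above does not specify the search procedure, so the beam-search structure, the function name `plan`, all parameter names, and the toy 1-D environment are assumptions for illustration only. In Uni-Plan all four callables would be served by the same UMM; here they are simple toy functions.

```python
import itertools
from typing import Callable, List


def plan(obs, policy: Callable, dynamics: Callable, inverse_dynamics: Callable,
         value: Callable, horizon: int = 3, beam_width: int = 2,
         n_samples: int = 3) -> List[str]:
    """Beam search over imagined rollouts (an assumed search scheme)."""
    beams = [(obs, [])]  # (imagined observation, actions taken so far)
    for _ in range(horizon):
        expanded = []
        for cur, actions in beams:
            for action in policy(cur):  # policy proposes candidate actions
                # dynamics model samples several next-observation predictions
                preds = [dynamics(cur, action) for _ in range(n_samples)]
                # self-discriminator keeps predictions consistent with the action
                valid = [p for p in preds if inverse_dynamics(cur, p) == action]
                if valid:
                    expanded.append((valid[0], actions + [action]))
        if not expanded:
            break
        # value function ranks the imagined observations
        expanded.sort(key=lambda beam: value(beam[0]), reverse=True)
        beams = expanded[:beam_width]
    return beams[0][1]


# Toy 1-D world: observations are integer positions and the goal is position 3.
_calls = itertools.count()


def toy_policy(pos: int) -> List[str]:
    return ["left", "right"]


def toy_dynamics(pos: int, action: str) -> int:
    step = 1 if action == "right" else -1
    # Every third prediction "hallucinates" by leaving the position unchanged.
    return pos if next(_calls) % 3 == 0 else pos + step


def toy_inverse_dynamics(pos: int, nxt: int) -> str:
    return {1: "right", -1: "left"}.get(nxt - pos, "stay")


def toy_value(pos: int) -> float:
    return -abs(3 - pos)


best_plan = plan(0, toy_policy, toy_dynamics, toy_inverse_dynamics, toy_value)
print(best_plan)
```

The toy dynamics function deliberately returns an invalid prediction on every third call, so the sketch also shows the filter discarding hallucinated transitions before the value function ranks the remaining candidates.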

FrozenLake

Mini-BEHAVIOR

Language Table

How Uni-Plan performs better than VLM-based planning methods

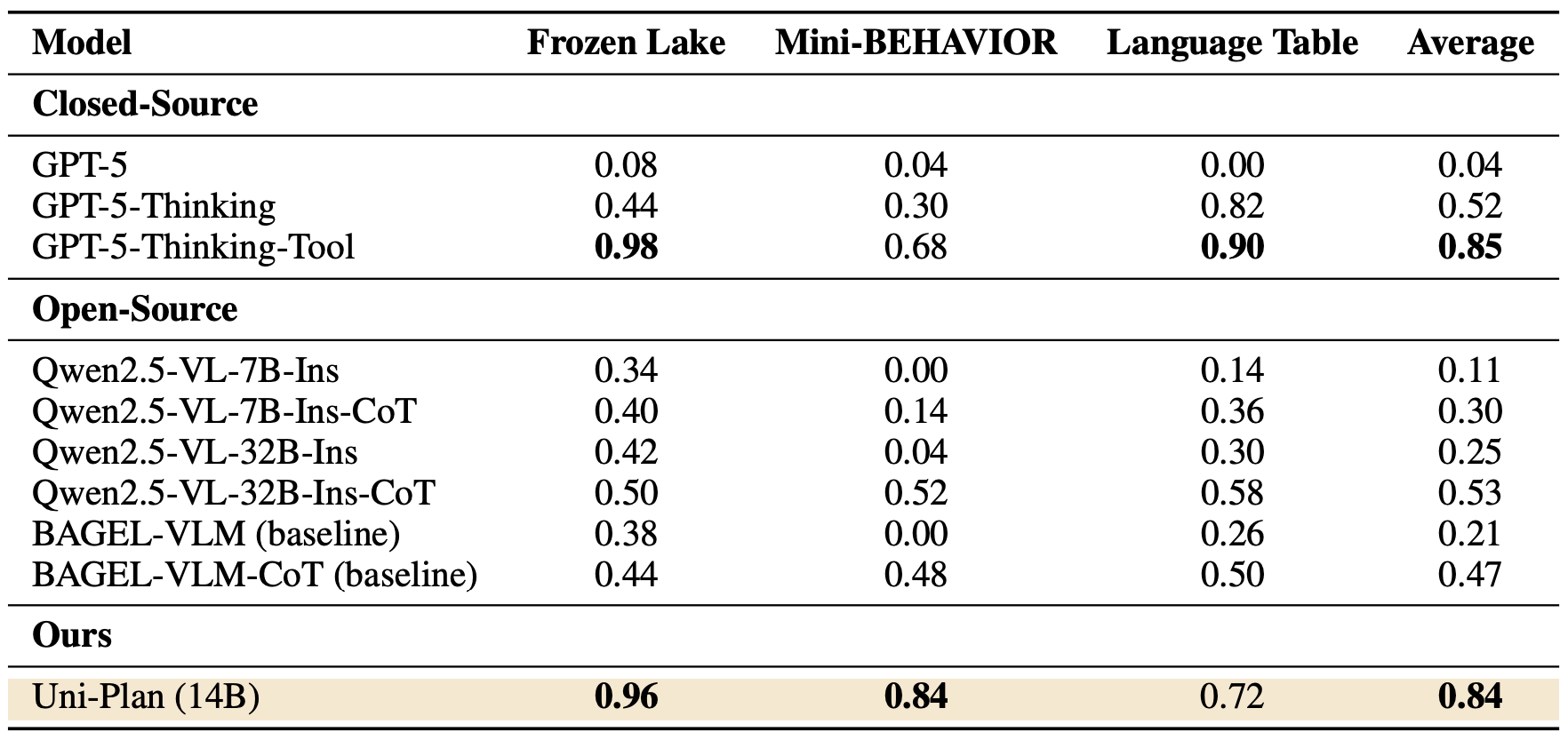

Advantage 1: Uni-Plan achieves higher success rates in long-horizon planning tasks.

The table below shows the planning success rates of different methods. Uni-Plan outperforms the open-source VLMs by a large margin and is comparable to an advanced closed-source model, GPT5-Thinking-Tool.

Table: Planning success rates of different methods

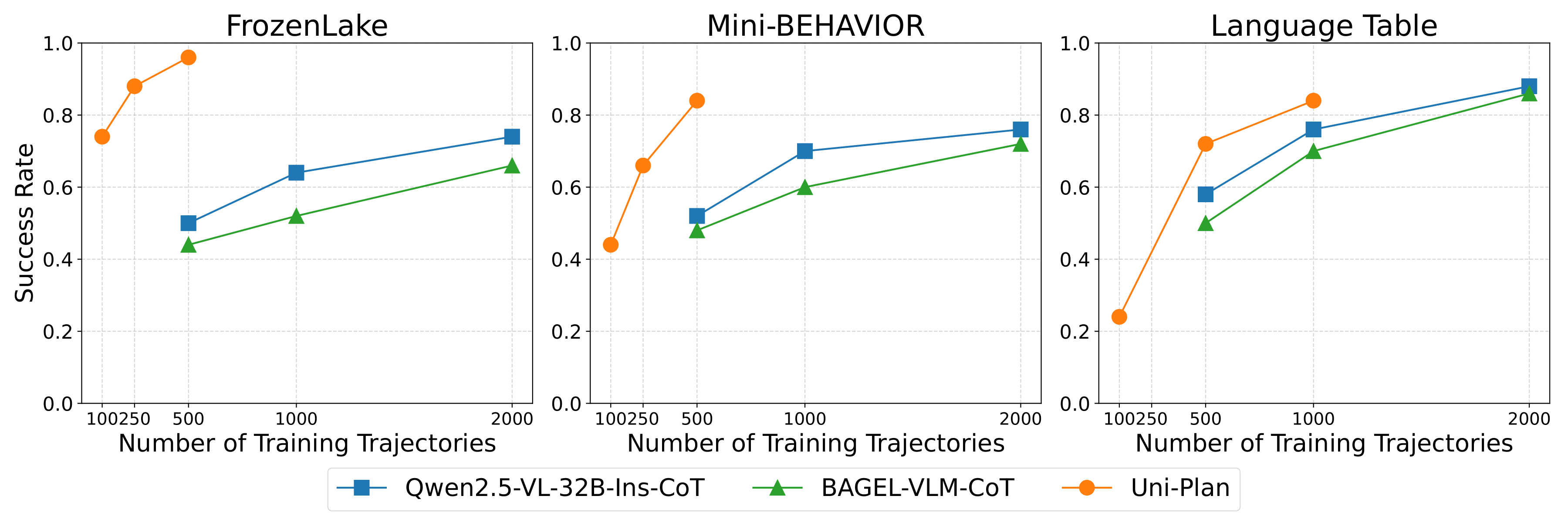

Advantage 2: Uni-Plan has more favorable data scalability.

Finetuning Uni-Plan requires no expert demonstrations, whereas finetuning VLM-based methods does. Despite relying only on non-expert data, Uni-Plan consistently achieves higher performance with less training data.

Data scalability

Foundation model

We use BAGEL as the foundation UMM in this work.

Bibtex

@misc{sun2025planningunifiedmultimodalmodels,
      title={Planning with Unified Multimodal Models},
      author={Yihao Sun and Zhilong Zhang and Yang Yu and Pierre-Luc Bacon},
      year={2025},
      eprint={2509.23014},
      archivePrefix={arXiv},
      primaryClass={cs.CV},
      url={https://arxiv.org/abs/2509.23014},
}

1Mila - Quebec AI Institute

2Université de Montréal

3Nanjing University
